Performance

Load test results

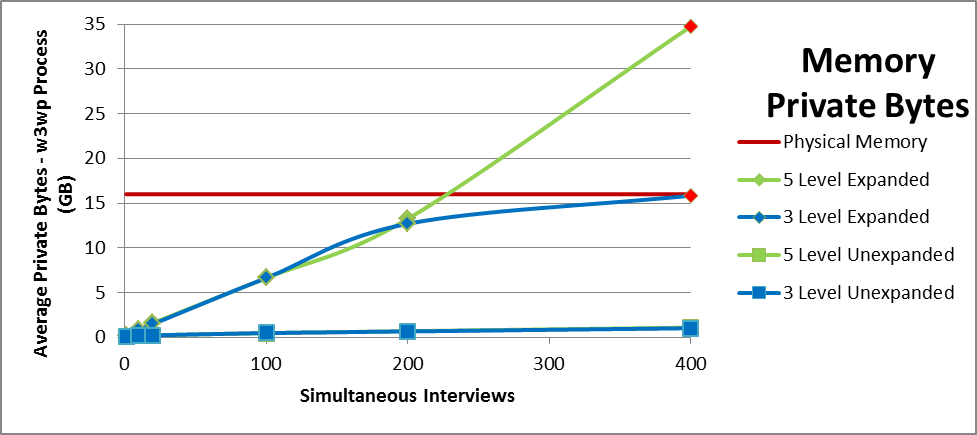

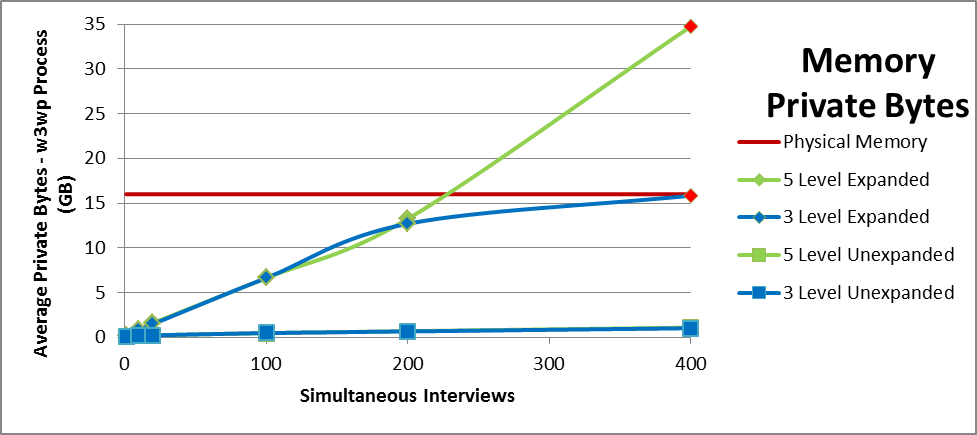

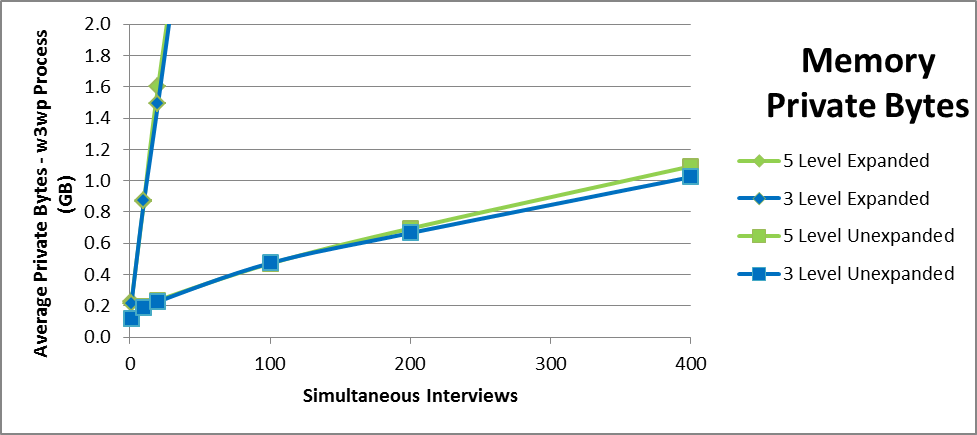

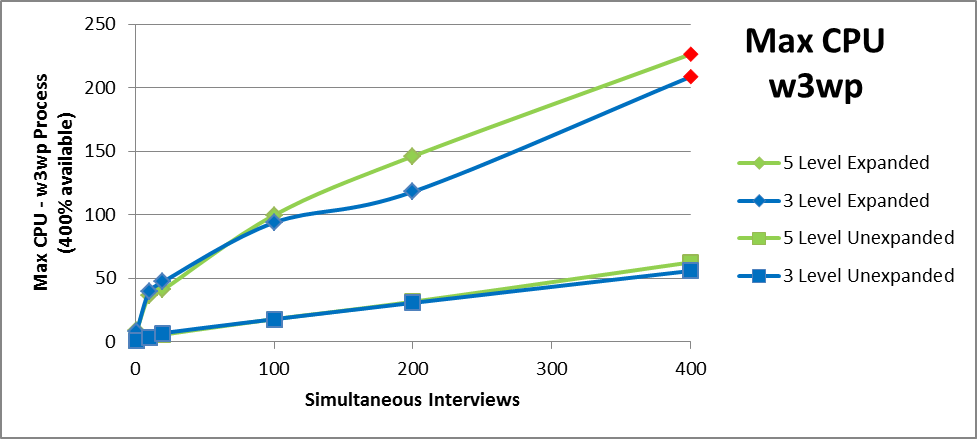

Load tests were performed using three and five level loops, both expanded and unexpanded, to investigate performance differences based on level and loop type. Tests were performed with 1, 10, 20, 100, 200, and 400 simultaneous interviews. The survey metadata files used for testing are described in Test setup and included in the DDL.

Note: The 400 simultaneous interview load tests failed with 503 errors for both the 3 level and 5 level expanded loop projects. These tests consumed all 16 GB of memory before they completed.

Memory

The memory chart shows that expanded loops require much more memory than unexpanded loops. The private bytes for the w3wp process hosting the engine exceed 5 GB for the expanded loops even at a load of 100 simultaneous interviews. The 3 level loop flattens out at around 16 GB while the 5 level loop continues to use more memory. The test machine has 16 GB of RAM, so the 400 simultaneous interview tests could not complete because of excessive memory usage. The red data points mark failed tests.

The difference in depth of the loop is insignificant for memory usage. The two lines for unexpanded loops are on top of each other.

Expanding the lower load areas of the chart, we can see that the memory rises significantly even with smaller loads. The difference between the 5 level tests and the 3 level tests is trivial in comparison.

CPU

The average CPU chart shows similar results to the memory charts. Expanded loops average more CPU than unexpanded.

The average CPU drop off for the 400 simultaneous interview test is probably due to failures caused by memory exhaustion.

Average CPU usage as a percentage of available CPU suggests that memory is the limiting resource rather than CPU. However, max CPU spikes indicate that CPU is just as much of an issue. The 5 level expanded test takes 100% of a single core at only 100 simultaneous interviews.

Respondent perceived times

The average time to start an interview is very close to the goal even for the expanded tests. The maximum time (table not included) is also close to the goal of 4 seconds for expanded as well as unexpanded loops.

Average time to start interview (seconds) by number of simultaneous interviews: Goal < 1 sec

| Loop type | Levels | 1 | 10 | 20 | 100 | 200 | 400 |
|---|---|---|---|---|---|---|---|
| Expanded | 5 Level | 1.34 | 1.11 | 1.09 | 1.12 | 1.10 | |
| Expanded | 3 Level | 1.23 | 1.02 | 0.99 | 1.00 | 0.98 | |
| Unexpanded | 5 Level | 0.40 | 0.14 | 0.12 | 0.11 | 0.11 | 0.10 |
| Unexpanded | 3 Level | 0.39 | 0.17 | 0.16 | 0.14 | 0.14 | 0.13 |
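As a rough cross-check of the start-time figures against the goal, the table values can be compared programmatically. The following is a minimal sketch, not part of the original test tooling: the names `LOADS`, `START_TIMES`, `loads_missing_goal`, and `summarize` are hypothetical, and the blank 400-interview cells are assumed to correspond to the expanded runs that failed.

```python
# Average time to start an interview (seconds), copied from the table.
# None marks the blank 400-interview cells; these are assumed to be the
# expanded runs that failed before completing.
LOADS = [1, 10, 20, 100, 200, 400]
START_TIMES = {
    ("Expanded", 5): [1.34, 1.11, 1.09, 1.12, 1.10, None],
    ("Expanded", 3): [1.23, 1.02, 0.99, 1.00, 0.98, None],
    ("Unexpanded", 5): [0.40, 0.14, 0.12, 0.11, 0.11, 0.10],
    ("Unexpanded", 3): [0.39, 0.17, 0.16, 0.14, 0.14, 0.13],
}
GOAL_SECONDS = 1.0  # the stated goal is "< 1 sec"


def loads_missing_goal(times, goal=GOAL_SECONDS):
    """Return the loads whose average start time is not strictly below the goal."""
    return [
        load
        for load, seconds in zip(LOADS, times)
        if seconds is not None and not seconds < goal
    ]


def summarize():
    """Map each (loop type, levels) pair to the loads that miss the goal."""
    return {key: loads_missing_goal(times) for key, times in START_TIMES.items()}


for (loop_type, levels), missed in summarize().items():
    print(f"{loop_type} {levels} level: loads at or above the goal = {missed}")
```

Read this way, the unexpanded projects meet the goal at every load, while the expanded projects sit at or slightly above it for most loads, which matches the description of the expanded results as very close to the goal.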

The average page to page time is well under the goal even for the expanded tests. The maximum time is also close to the goal of 2 seconds (chart not included), with a very small percentage of anomalies.

Average page to page time (seconds) by number of simultaneous interviews: Goal < 1 sec

| Loop type | Levels | 1 | 10 | 20 | 100 | 200 | 400 |
|---|---|---|---|---|---|---|---|
| Expanded | 5 Level | 0.04 | 0.03 | 0.03 | 0.04 | 0.04 | |
| Expanded | 3 Level | 0.05 | 0.04 | 0.04 | 0.04 | 0.04 | |
| Unexpanded | 5 Level | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 |
| Unexpanded | 3 Level | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 |

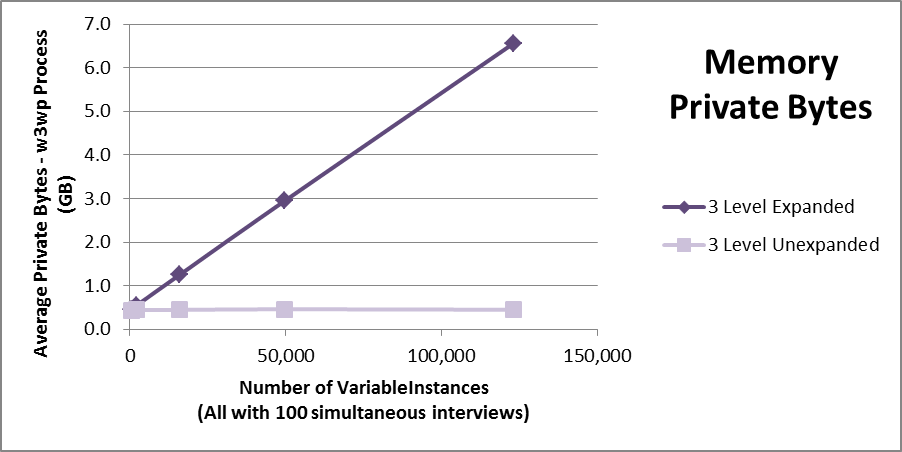

Results of tests using differing numbers of variables

Tests were performed using differing numbers of variables with both expanded and unexpanded loops to investigate resource utilization differences. The results below are reported by number of VariableInstances, as counted in the expanded loop project, because the unexpanded loop project has very few VariableInstances for the same number of variables. Tests were performed with 390, 2,255, 15,830, 49,710, and 123,130 VariableInstances.

Memory

This chart shows the changes in memory usage for different numbers of variable instances. The existing guideline of 20,000 variable instances translates to about 1.5 GB of memory usage for the expanded project. This seems a reasonable maximum and guideline.

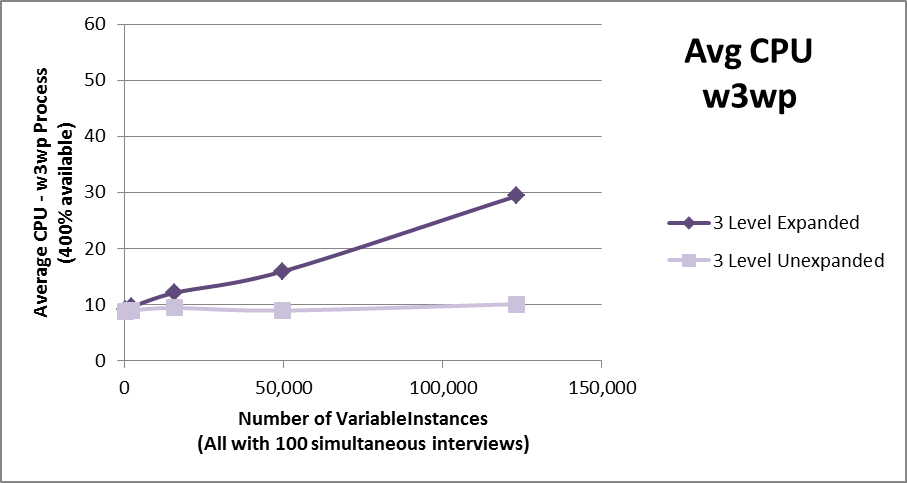

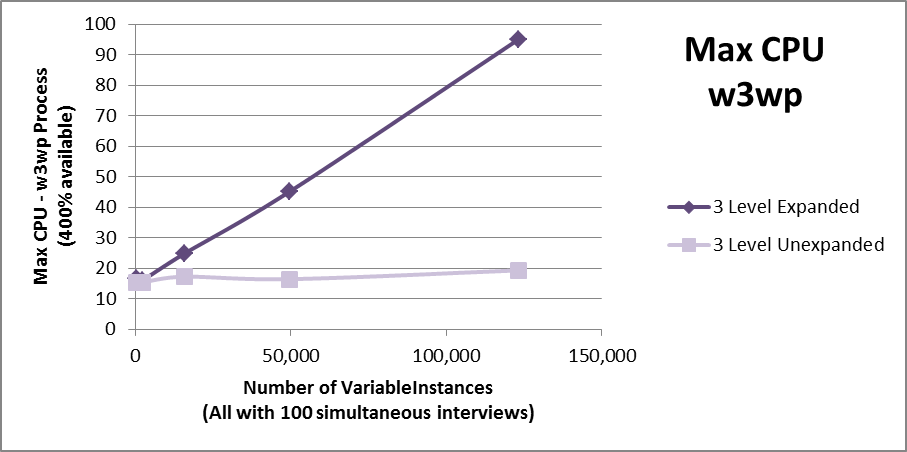

CPU

This chart shows the changes in CPU usage for different numbers of variable instances. CPU usage differs by only 20% across the range of variable instances, which seems less significant than the 6 GB difference in memory usage.

However, the max CPU usage shows a 75% difference between the largest expanded test and the unexpanded test. The average CPU requirements might not be that different for expanded and unexpanded loops, but more CPU is required to handle the larger maximum spikes for expanded loops.

Respondent perceived times

The time to start an interview and the page to page time are acceptable across most of the range of variable instances. However, somewhere between 15,830 and 123,130 variable instances the start time for the expanded project climbs toward the limit, reaching 1.01 seconds at 123,130. This result reinforces the guideline of 20,000 maximum variable instances.

Average time to start interview (seconds) by number of VariableInstances, at 100 simultaneous interviews: Goal < 1 sec

| Loop type | Levels | 390 | 2,255 | 15,830 | 49,710 | 123,130 |
|---|---|---|---|---|---|---|
| Expanded | 3 Level | 0.12 | 0.14 | 0.23 | 0.45 | 1.01 |
| Unexpanded | 3 Level | 0.12 | 0.12 | 0.13 | 0.13 | 0.15 |
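To get a rough idea of where the expanded start time sits at the 20,000 VariableInstance guideline, and where it would reach the 1 second goal, the measured points can be interpolated. This is only a sketch: it assumes the start time varies linearly between adjacent measurements, which the tests do not establish, and the names `EXPANDED_START`, `interpolate_time`, and `instances_at_time` are hypothetical.

```python
# (VariableInstances, average start time in seconds) for the expanded
# 3 level project at 100 simultaneous interviews, copied from the table.
EXPANDED_START = [
    (390, 0.12),
    (2255, 0.14),
    (15830, 0.23),
    (49710, 0.45),
    (123130, 1.01),
]


def interpolate_time(instances, points=EXPANDED_START):
    """Estimate the start time at `instances`.

    Assumption: the time changes linearly between adjacent measured points.
    """
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 <= instances <= x1:
            return y0 + (instances - x0) * (y1 - y0) / (x1 - x0)
    raise ValueError("instances is outside the measured range")


def instances_at_time(target, points=EXPANDED_START):
    """Estimate the instance count at which the start time reaches `target`.

    Uses the same linear assumption, applied in the other direction.
    """
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y0 <= target <= y1:
            return x0 + (target - y0) * (x1 - x0) / (y1 - y0)
    raise ValueError("target is outside the measured range")


print(f"Estimated start time at 20,000 instances: {interpolate_time(20000):.2f} s")
print(f"Estimated instances at the 1 s goal: {instances_at_time(1.0):,.0f}")
```

Because only five points were measured, the estimates are indicative at best. They are useful mainly for seeing how much headroom the 20,000 guideline leaves relative to the goal.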

Average page to page time (seconds) by number of VariableInstances, at 100 simultaneous interviews: Goal < 1 sec

| Loop type | Levels | 390 | 2,255 | 15,830 | 49,710 | 123,130 |
|---|---|---|---|---|---|---|
| Expanded | 3 Level | 0.03 | 0.03 | 0.03 | 0.03 | 0.04 |
| Unexpanded | 3 Level | 0.03 | 0.03 | 0.03 | 0.03 | 0.03 |

Single respondent tests

These tests measure the resource usage for a single respondent with varying numbers of iterations. The tests were run manually using the following script. Each test is started after an iisreset so the project caching is included and consistent. The test also checks the impact of a restart after step 6.

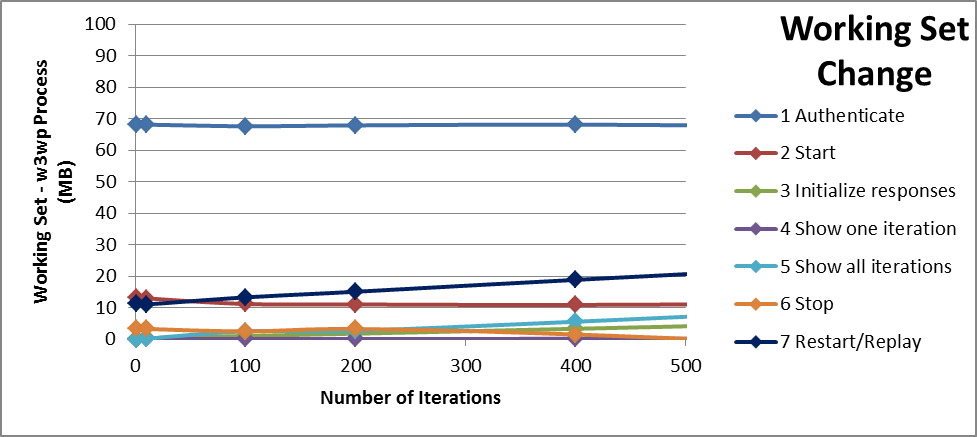

This chart shows the difference in Working Set memory at each step of the survey. The “1 Authenticate” step shows a large jump in memory due to the loading of the survey; however, this memory allocation is not affected by the number of iterations chosen.

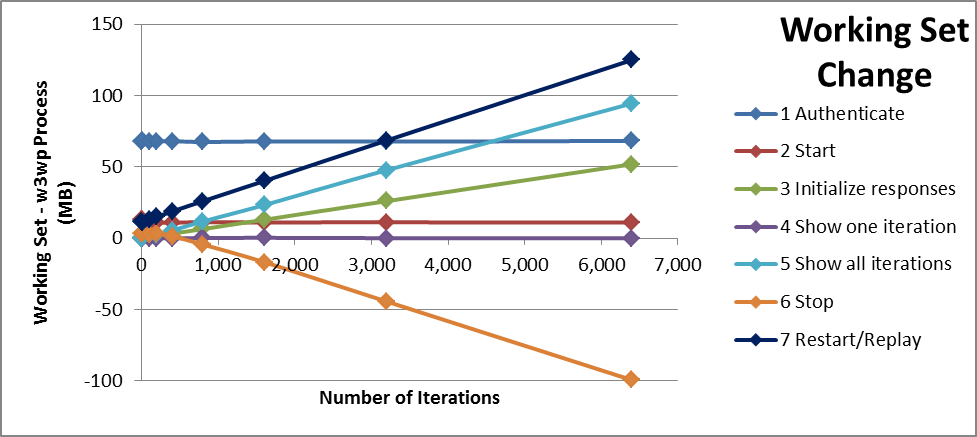

The chart shows that the number of iterations has a large impact on “7 Restart/Replay”, which is attributed to replaying more iterations. There is also a large impact on “5 Show all iterations”. As expected, more work is done to set up and display more iterations. The 12,800 VariableInstance results are not displayed because that test could not run to completion. The test failed on the “3 Initialize responses” step with a “The service is unavailable.” error displayed to the respondent. This test did not reach steps 5 or 7, where the largest memory usage was measured.

The size of the loop has no impact on “1 Authenticate”, “2 Start”, or “4 Show one iteration”.

The “6 Stop” step releases all the memory used in previous steps, so its change in memory is negatively correlated with the number of iterations.

This memory usage might not appear significant in this chart. However, this is a single respondent test, and the memory usage would be multiplied by each additional respondent.

However, thousands of iterations might be excessive. Expanding the lower region of the chart to show only up to 500 iterations shows that the memory difference is insignificant up to about 400 iterations.
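The point that single respondent memory is multiplied by each additional respondent can be sketched as a simple linear estimate. Everything numeric in the usage lines other than the 16 GB of RAM on the test machine is a hypothetical placeholder, not a measured result, and the function names are illustrative only. The model also assumes that memory scales linearly with respondents, which the load tests suggest only loosely.

```python
def estimated_memory_mb(respondents, base_mb, per_respondent_mb):
    """Estimate total memory as a fixed base plus a per-respondent cost.

    Assumption: memory grows linearly with the number of respondents.
    """
    return base_mb + respondents * per_respondent_mb


def max_respondents(available_mb, base_mb, per_respondent_mb):
    """Largest respondent count whose estimated memory fits in available_mb."""
    if per_respondent_mb <= 0:
        raise ValueError("per_respondent_mb must be positive")
    headroom_mb = available_mb - base_mb
    return max(0, int(headroom_mb // per_respondent_mb))


# Hypothetical inputs for illustration only; substitute values read from
# your own single respondent measurements.
AVAILABLE_MB = 16 * 1024   # the test machine described above has 16 GB of RAM
BASE_MB = 500              # hypothetical: memory after the survey is loaded
PER_RESPONDENT_MB = 40     # hypothetical: added memory per active respondent

print(estimated_memory_mb(100, BASE_MB, PER_RESPONDENT_MB))
print(max_respondents(AVAILABLE_MB, BASE_MB, PER_RESPONDENT_MB))
```

A per-respondent cost that looks small on the single respondent chart can still exhaust the machine at a few hundred simultaneous interviews, which is why the number of iterations matters even when the single respondent difference seems minor.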

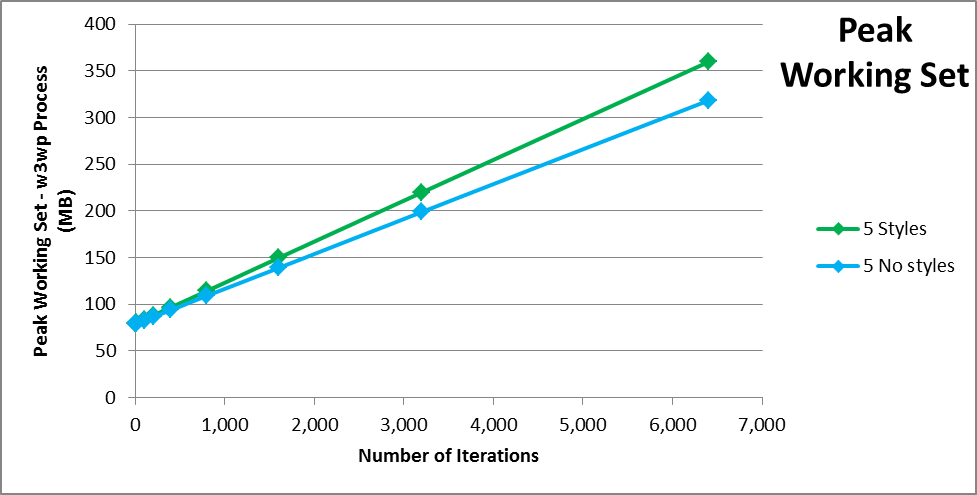

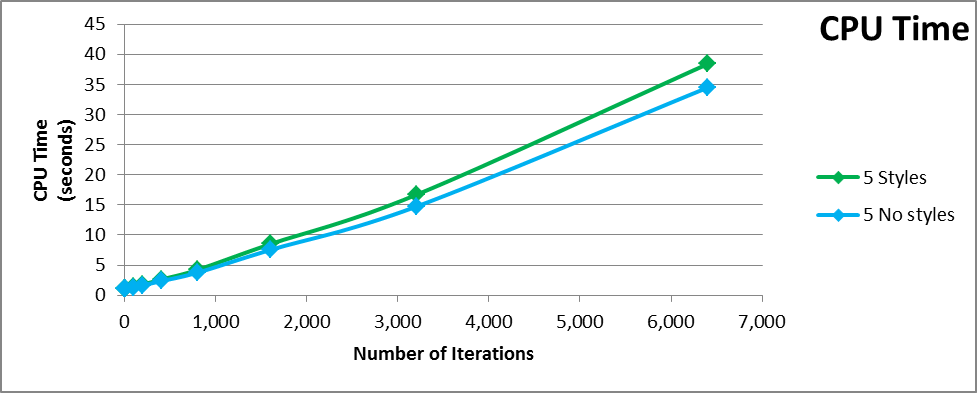

The second test checks the impact of styles. At step 5, we either show all iterations, or show all iterations with styles. Styles have only a small impact on both memory and CPU. This memory chart shows total memory in use at this step rather than relative memory like the preceding chart.
